Discord Bot that Uses LLM to Access User Statistics

Posted on: 2025-07-15

I own a Discord server where I have over 140 members. I have a bot that perform several automation like role assignation, finding games for the users, provide daily statistics, and more. The latest feature gives the user the ability to ask questions about their statistics using a large language model (LLM). The bot uses the Gemini 2.5 Flash to access the LLM and provide answers based on the user's data.

The bot is written in Python and uses the discord.py library to interact with the Discord API. The LLM integration is done using the google.generativeai library, which allows us to send prompts to the Gemini model and receive responses. The choice to Google Gemini was because the API does not require credit card and has a free tier that is unlimited in usage. This is perfect for a hobby project like mine.

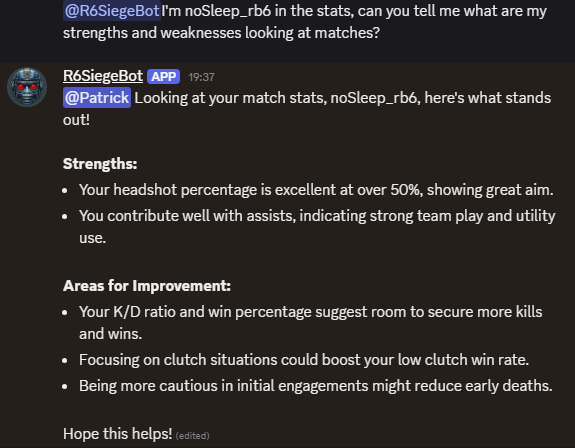

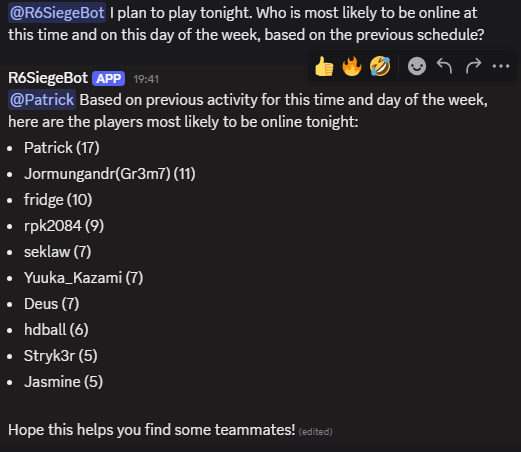

How it Looks

Here are few examples of how the bot works:

How it Works

There are a few details that are important. The first one is that Discord will block and not send a heartbeat if you do a long query. The LLM API might take 20 seconds, which blocks the whole bot. Hence, I used the asyncio library to run the LLM query in a separate thread.

response = await asyncio.to_thread(

lambda: asyncio.run(

generate_answer_when_mentioning_bot(

context, message.content, message.author.display_name, message.author.id

)

)

)

To give some awareness of the conversation around what a user might ask. I always include the prompt with the context of the previous messages. I used a few messages before the message that the bot replied to. If the bot was mentioned, I also added the few latest messages from the channel mentioned.

The rest of the prompt is enhanced depending on some keywords. For example, if the user asks about statistics, I have a function that adds all the schema of the statistics tables to the prompt to create an SQL query, which I then perform upon receiving the reply. If no results are found or if there is a SQL query error, that information is sent back to the LLM for another iteration. I limit the iterations to 4 to avoid infinite loops.

try_count = 0

context = "You are a bot that is mentioned in a Discord server. You need to answer to the user who mentioned you."

context += "You should not mention anything about your name or your purpose, just answer the question."

context += "Here is the context of some previous message that might help you craft the best response:"

context += "Previous messages: " + context_previous_messages

result_sql = ""

sql_context = message_user

while try_count < 4 and result_sql == "":

# Ask Gemini to generate a SQL query to fetch stats from the database

sql_from_llm = ask_gemini_sql_for_stats(sql_context, user_id)

if sql_from_llm is not None and sql_from_llm != "":

print_log(f"SQL query generated by Gemini: {sql_from_llm}")

clean_response = sql_from_llm.strip().replace("```sql", "").replace("```", "")

try:

result_sql = data_access_execute_sql_query_from_llm(clean_response)

except Exception as e:

context += "Your failed with this SQL error: " + str(e) + "\nPlease try again with a different query."

continue

print_log(f"SQL query result: {result_sql}")

if result_sql != "":

context += (

"You can use that information from our database to complete your answer: SQL Queries fields:\n"

)

context += sql_from_llm

context += "SQL Query result:\n"

context += result_sql

else:

sql_context += (

"The previous SQL query you provided did not return any result. Please try again with a different query. Here is what you provided: "

+ clean_response

)

try_count += 1

context += "User question:" + message_user

u = user_display_name.lower()

try:

response = await ask_gemini_async(context)

except Exception as e:

print_error_log(f"Error while asking Gemini: {e}")

return ""

return response

The ask_gemini_sql_for_stats function adds the schema and fields. Fortunately, I have the table name and the most common fields in variables!

def ask_gemini_sql_for_stats(message_user: str, user_id: int) -> Union[str, None]:

"""

Ask Gemini to generate a SQL query for stats based on the user message.

"""

need_sql = False

context = "You are a bot that is asked to generate a SQL query to fetch stats from a database."

context += "The user id: " + str(user_id)

context += "The user question is: " + message_user

context += "Generate a SQL query that fetches data that is relevant to the user question."

context += "The query should be valid and should not return any error when executed. To do wrap the response with"

context += "The query should probably use aggregation functions like COUNT, SUM, AVG, MAX, MIN, etc. to avoid large result sets."

context += (

"The query should be in the format of a string that can be executed in Python and compatible with SQLite 3.45."

)

context += "Do not mention anything about the request or database schema, only return the SQL query and only SELECT query is acceptable."

if "stats" in message_user.lower() or "match" in message_user.lower() or "data" in message_user.lower():

context += f"Table name `{KEY_USER_FULL_MATCH_INFO}`"

context += "The fields: " + SELECT_USER_FULL_MATCH_INFO.replace(KEY_USER_FULL_MATCH_INFO + ".", "")

context += f"Table name `{KEY_USER_FULL_MATCH_INFO}`"

context += "The fields: " + SELECT_USER_FULL_STATS_INFO.replace(KEY_USER_FULL_MATCH_INFO + ".", "")

need_sql = True

if "tournament" in message_user.lower() or "bet" in message_user.lower():

context += f"Table name `{KEY_TOURNAMENT}`"

context += "The fields: " + SELECT_TOURNAMENT.replace(KEY_TOURNAMENT + ".", "")

context += f"Table name `{KEY_USER_TOURNAMENT}`"

context += "The fields: " + SELECT_USER_TOURNAMENT.replace(KEY_USER_TOURNAMENT + ".", "")

context += f"Table name `{KEY_TOURNAMENT_GAME}`"

context += "The fields: " + SELECT_TOURNAMENT_GAME.replace(KEY_TOURNAMENT_GAME + ".", "")

context += f"Table name `{KEY_TOURNAMENT_TEAM_MEMBERS}`"

context += "The fields: " + SELECT_TOURNAMENT_TEAM_MEMBERS.replace(KEY_TOURNAMENT_TEAM_MEMBERS + ".", "")

context += f"Table name `{KEY_bet_user_game}`"

context += "The fields: " + SELECT_BET_USER_GAME.replace(KEY_bet_user_game + ".", "")

context += f"Table name `{KEY_bet_user_tournament}`"

context += "The fields: " + SELECT_BET_USER_TOURNAMENT.replace(KEY_bet_user_tournament + ".", "")

context += f"Table name `{KEY_bet_game}`"

context += "The fields: " + SELECT_BET_GAME.replace(KEY_bet_game + ".", "")

context += f"Table name `{KEY_bet_ledger_entry}`"

context += "The fields: " + SELECT_LEDGER.replace(KEY_bet_ledger_entry + ".", "")

need_sql = True

if "time" in message_user.lower() or "date" in message_user.lower() or "schedule" in message_user.lower():

context += f"Table name `{KEY_USER_ACTIVITY}`"

context += "The fields: " + USER_ACTIVITY_SELECT_FIELD.replace(KEY_USER_ACTIVITY + ".", "")

context += f"The field above has the field event that can be '{EVENT_CONNECT}' or '{EVENT_DISCONNECT}' which can be used to know when someone was online between a period of time"

need_sql = True

if not need_sql:

return ""

# All the time

context += f"Table name `{KEY_USER_INFO}`"

context += "The fields: " + USER_INFO_SELECT_FIELD.replace(KEY_USER_INFO + ".", "")

try:

response = ask_gemini(context)

return response

except Exception as e:

print_error_log(f"Error while asking Gemini for SQL query: {e}")

return ""

Reactions

The addition of the bot was a very big success. People were asking a lot of questions about their statistics or who is better than them or what they should do to improve. The bot is not perfect, but it's a great addition to the server, helping people understand their statistics better while also providing a fun experience.

Future

I have been learning LangChain/LangGraph in the last few days, and I plan to improve the current basic flow of the Python Discord bot with the agentic feature. I can already see that gathering the correct information from the database, generating the SQL query, and then executing it can be done in a more structured way using LangChain. This will allow me to create more complex flows and interactions with the bot, making it even more powerful and useful for the users. Perhaps incorporating visual graphs and other outputs could be implemented in the future.