How to boost caching performance when caching Entity Framework objects

Posted on: 2016-10-12

Entity Framework objects are risky to cache because they keep references to other objects. If an object contains a list of objects that each point back to the initial object, the reference graph is effectively infinitely deep. This is not a problem in memory, since the references are just pointers, but it becomes a problem when you serialize. Json.NET can preserve references: each object is serialized once, and later occurrences refer to it through a $ref id. This can still be expensive, because the serializer has to walk the object tree to determine whether more serialization is needed. Another way to optimize serialization with Json.NET is a custom ContractResolver that checks the current depth and stops serializing past a limit. Combining reference preservation with a custom ContractResolver looks like this:

    using System;
    using System.Collections.Generic;
    using System.IO;
    using System.Reflection;
    using Newtonsoft.Json;
    using Newtonsoft.Json.Serialization;

    public static class Serialization {

        public static string Serialize<T>(T objectToSerialize, int maxDepth = 5) {
            // GlimpseCodeSection is defined elsewhere in the project (presumably a profiling scope).
            using (var performanceLog = new GlimpseCodeSection("Serialize")) {
                using (var strWriter = new StringWriter()) {
                    using (var jsonWriter = new CustomJsonTextWriter(strWriter)) {
                        // Properties are only written while the writer is within maxDepth.
                        Func<bool> include = () => jsonWriter != null && jsonWriter.CurrentDepth <= maxDepth;
                        var resolver = new DepthContractResolver(include);
                        var serializer = new JsonSerializer();
                        serializer.Formatting = Formatting.Indented;
                        serializer.ContractResolver = resolver;
                        serializer.ReferenceLoopHandling = ReferenceLoopHandling.Serialize;
                        serializer.PreserveReferencesHandling = PreserveReferencesHandling.Objects;
                        serializer.TypeNameHandling = TypeNameHandling.All;
                        serializer.ConstructorHandling = ConstructorHandling.AllowNonPublicDefaultConstructor;
                        serializer.NullValueHandling = NullValueHandling.Include;
                        serializer.Serialize(jsonWriter, objectToSerialize);
                    }
                    return strWriter.ToString();
                }
            }
        }

        public static T Deserialize<T>(string objectSerialized) {
            using (var performanceLog = new GlimpseCodeSection("Deserialize")) {
                var contractResolver = new PrivateResolver();
                var obj = JsonConvert.DeserializeObject<T>(objectSerialized, new JsonSerializerSettings {
                    ReferenceLoopHandling = ReferenceLoopHandling.Serialize,
                    PreserveReferencesHandling = PreserveReferencesHandling.Objects,
                    TypeNameHandling = TypeNameHandling.All,
                    ConstructorHandling = ConstructorHandling.AllowNonPublicDefaultConstructor,
                    ContractResolver = contractResolver,
                    NullValueHandling = NullValueHandling.Include
                });
                return obj;
            }
        }

        /// <summary>
        /// Allows properties with a private setter to be written during deserialization
        /// </summary>
        public class PrivateResolver : DefaultContractResolver {
            protected override JsonProperty CreateProperty(MemberInfo member, MemberSerialization memberSerialization) {
                var prop = base.CreateProperty(member, memberSerialization);
                if (!prop.Writable) {
                    var property = member as PropertyInfo;
                    if (property != null) {
                        var hasPrivateSetter = property.GetSetMethod(true) != null;
                        prop.Writable = hasPrivateSetter;
                    }
                }
                return prop;
            }
        }

        public class DepthContractResolver : DefaultContractResolver {
            private readonly Func<bool> includeProperty;

            public DepthContractResolver(Func<bool> includeProperty) {
                this.includeProperty = includeProperty;
            }

            protected override JsonProperty CreateProperty(MemberInfo member, MemberSerialization memberSerialization) {
                var property = base.CreateProperty(member, memberSerialization);

                // See if we should serialize with the depth
                var shouldSerialize = property.ShouldSerialize;
                property.ShouldSerialize = obj => this.includeProperty() && (shouldSerialize == null || shouldSerialize(obj));

                // A property with a private setter is okay to serialize
                if (!property.Writable) {
                    var propertyInfo = member as PropertyInfo;
                    if (propertyInfo != null) {
                        var hasPrivateSetter = propertyInfo.GetSetMethod(true) != null;
                        property.Writable = hasPrivateSetter;
                    }
                }
                return property;
            }

            protected override IList<JsonProperty> CreateProperties(Type type, MemberSerialization memberSerialization) {
                IList<JsonProperty> props = base.CreateProperties(type, memberSerialization);
                var propertyToSerialize = new List<JsonProperty>();
                foreach (var property in props) {
                    if (property.Writable) {
                        propertyToSerialize.Add(property);
                    } else {
                        var propertyInfo = type.GetProperty(property.PropertyName);
                        if (propertyInfo != null) {
                            var hasPrivateSetter = propertyInfo.GetSetMethod(true) != null;
                            if (hasPrivateSetter) {
                                propertyToSerialize.Add(property);
                            }
                        }
                    }
                }
                return propertyToSerialize;
            }
        }

        // Tracks how many objects deep the writer currently is.
        public class CustomJsonTextWriter : JsonTextWriter {
            public int CurrentDepth { get; private set; } = 0;

            public CustomJsonTextWriter(TextWriter textWriter) : base(textWriter) { }

            public override void WriteStartObject() {
                this.CurrentDepth++;
                base.WriteStartObject();
            }

            public override void WriteEndObject() {
                this.CurrentDepth--;
                base.WriteEndObject();
            }
        }
    }
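To see the reference handling on its own, without the depth resolver, here is a minimal sketch. The Parent and Child classes and the ReferenceDemo helper are hypothetical names made up for this illustration; only the Json.NET settings come from the code above. With PreserveReferencesHandling.Objects, the back-reference from the child to its parent should be written as a $ref entry instead of being serialized again.

```csharp
using System.Collections.Generic;
using Newtonsoft.Json;

// Hypothetical classes: a parent with children that point back to it.
public class Parent
{
    public string Name { get; set; }
    public List<Child> Children { get; set; } = new List<Child>();
}

public class Child
{
    public string Name { get; set; }
    public Parent Parent { get; set; }   // back-reference that closes the cycle
}

public static class ReferenceDemo
{
    public static string SerializeCycle()
    {
        var parent = new Parent { Name = "parent" };
        parent.Children.Add(new Child { Name = "child", Parent = parent });

        // Each object gets an $id the first time it is written;
        // later occurrences are written as a $ref to that id.
        var settings = new JsonSerializerSettings
        {
            PreserveReferencesHandling = PreserveReferencesHandling.Objects
        };
        return JsonConvert.SerializeObject(parent, settings);
    }
}
```

Without the setting, the default ReferenceLoopHandling would throw on this graph, which is why the reference option matters before any depth limit is considered.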

Even with those optimizations, serialization can take a long time. A common scenario is that you have a big Entity Framework object to cache. Before serializing it, you want to cut some branches by setting properties to null. For example, if the main entity has many collections, you may want to null those collections and store a lighter object in Redis. But if you null a property on the main object, it is left with missing data and in a bad state. So, the pattern is to serialize the object once and deserialize it right away, which gives you a complete clone. You null some properties on that clone, and the changes do not affect the real object. You then serialize the clone and store it in Redis. The drawback is that this takes two serializations and one deserialization, while the best case would be a single serialization.
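The round trip described above can be sketched as follows. This is only an illustration: the Order class and the RoundTripClone helper are hypothetical, and the sketch uses plain JsonConvert calls with default settings rather than the Serialization class shown earlier.

```csharp
using System.Collections.Generic;
using Newtonsoft.Json;

// Hypothetical entity used only for this illustration.
public class Order
{
    public int Id { get; set; }
    public List<string> Lines { get; set; } = new List<string>();
}

public static class RoundTripClone
{
    // Clone by serializing and deserializing right away
    // (first serialization + the only deserialization).
    public static T Clone<T>(T source)
    {
        var json = JsonConvert.SerializeObject(source);
        return JsonConvert.DeserializeObject<T>(json);
    }

    // Trim the clone, then serialize it a second time to get the cache payload.
    public static string BuildCachePayload(Order original)
    {
        var clone = Clone(original);
        clone.Lines = null;                         // cut a branch on the copy only
        return JsonConvert.SerializeObject(clone);  // second serialization
    }
}
```

The original object keeps its Lines collection, but the cost is visible in the code: two calls to SerializeObject and one to DeserializeObject for a single cache write.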

The pattern remains good, but this way of achieving it is wrong. A better approach is to clone the object in C#. The benefit is speed; the disadvantage is that you need a cloning method on every class, which can be time-consuming to write. It is also difficult to know how to clone each object: you often need both a shallow clone and a deep clone, and you must call the right one depending on the situation and the class. The speed-up varies a lot, but on objects with a huge graph I saw cloning go from 500 ms to 4 ms. That is very good for the first clone operation. Afterwards, once some properties are cut, the same object can take about 20 ms to serialize.

Here is an example:

    public Contest ShallowCloneManual() {
        var contest = (Contest)this.MemberwiseClone();
        contest.RegistrationRules = this.registrationRules.DeepCloneManual();
        contest.AllowedMarkets = this.AllowedMarkets?.ShallowCloneManual();
        contest.ContestOrderType = this.contestOrderType?.DeepCloneManual();
        contest.Creator = this.Creator?.ShallowCloneManual();
        contest.DailyStatistics = this.DailyStatistics?.ShallowCloneManual();
        contest.InitialCapital = this.InitialCapital.DeepCloneManual();
        contest.Moderators = this.Moderators?.ShallowCloneManual();
        contest.Name = this.Name.DeepCloneManual();
        contest.TransactionRules = this.TransactionRules.DeepCloneManual();
        contest.StockRules = this.StockRules?.DeepCloneManual();
        contest.ShortRules = this.ShortRules?.DeepCloneManual();
        contest.OptionRules = this.OptionRules?.DeepCloneManual();
        contest.Portefolios = this.Portefolios?.ShallowCloneManual();
        return contest;
    }

    public Contest DeepCloneManual() {
        var contest = (Contest)this.MemberwiseClone();
        contest.RegistrationRules = this.registrationRules.DeepCloneManual();
        contest.AllowedMarkets = this.AllowedMarkets?.ShallowCloneManual();
        contest.ContestOrderType = this.contestOrderType?.DeepCloneManual();
        contest.Creator = this.Creator?.ShallowCloneManual();
        contest.DailyStatistics = this.DailyStatistics?.ShallowCloneManual();
        contest.InitialCapital = this.InitialCapital.DeepCloneManual();
        contest.Moderators = this.Moderators?.DeepCloneManual();
        contest.Name = this.Name.DeepCloneManual();
        contest.TransactionRules = this.TransactionRules.DeepCloneManual();
        contest.StockRules = this.StockRules?.DeepCloneManual();
        contest.ShortRules = this.ShortRules?.DeepCloneManual();
        contest.OptionRules = this.OptionRules?.DeepCloneManual();
        contest.Portefolios = this.Portefolios?.DeepCloneManual();
        return contest;
    }

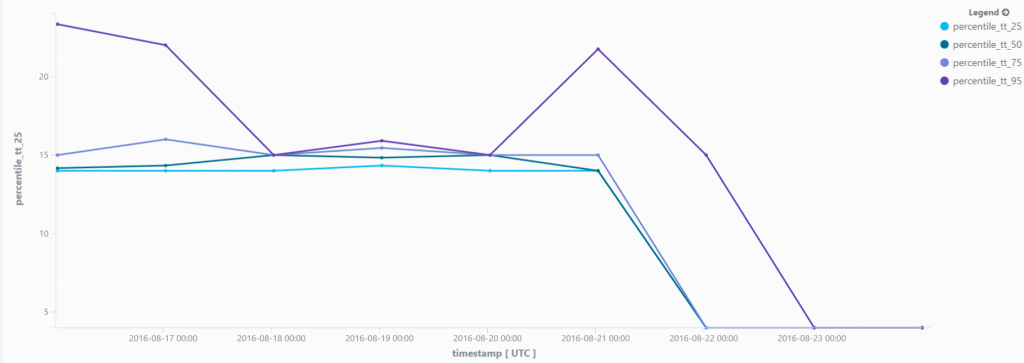

Some improvements could make this more generic. For example, DeepCloneManual could take a static options object that tracks the depth level and stops cloning past a limit. Cloning in C# had a significant impact on an Azure WebJob where thousands of objects needed to be reduced and sent to Azure Redis. You can see the drop in the graph above: the 75th percentile went down from 16 minutes to less than 4 minutes, and the 95th percentile from more than 20 minutes to 4 minutes.
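The depth-tracking idea mentioned above could look something like the sketch below. Everything here is hypothetical: CloneOptions, Node, and the extra parameter on DeepCloneManual are names invented for the illustration, not code from the project. I also pass the options object as a parameter instead of making it static, on the assumption that several clones may run in parallel.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical options object: carries the depth budget through the clone calls.
public sealed class CloneOptions
{
    public int MaxDepth { get; }
    public int CurrentDepth { get; private set; }

    public CloneOptions(int maxDepth) { MaxDepth = maxDepth; }

    public bool CanGoDeeper => CurrentDepth < MaxDepth;
    public void Enter() { CurrentDepth++; }
    public void Leave() { CurrentDepth--; }
}

// Hypothetical self-referencing entity.
public class Node
{
    public string Name { get; set; }
    public List<Node> Children { get; set; } = new List<Node>();

    public Node DeepCloneManual(CloneOptions options)
    {
        var clone = (Node)this.MemberwiseClone();
        if (!options.CanGoDeeper)
        {
            clone.Children = null;   // depth budget exhausted: cut the branch
            return clone;
        }

        options.Enter();
        try
        {
            // ToList() forces the child clones to be created while the depth is raised.
            clone.Children = this.Children?.Select(c => c.DeepCloneManual(options)).ToList();
        }
        finally
        {
            options.Leave();
        }
        return clone;
    }
}
```

Calling root.DeepCloneManual(new CloneOptions(1)) on a three-level tree would clone the root and its direct children, and leave the children's own Children set to null on the copy. The depth limit also acts as a guard if the graph contains a cycle.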

To conclude, cloning an Entity Framework object by serializing and deserializing it is expensive in terms of processing but quick to implement. It should be used sparingly.