How to Transfer Files Between Computers Using HDMI (Part 7: Pagination)

Posted on: 2023-07-08

Transferring data using the HDMI cable and the capture card continues to demonstrate how easily corruption can cause the file to be unusable.

Let's review a few points that show we have some inconsistency around frames.

ffmpeg -f dshow -list_options true -i video="USB Video"

The output shows vcodec=mjpeg. The codec is a compressed one, and so far we have worked around the issue in a less space-efficient way: using only black and white, with more than a single pixel to hold each piece of information.

ffmpeg -r 15 -f dshow -s 1920x1080 -vcodec mjpeg -rtbufsize 200M -i video="USB Video" -r 15 out_fps15_size10px.mp4

The command above uses a lower speed of 15 frames per second (fps) to slow down the whole process. Encoding the file at 15 fps was also required, but the problem is still not fixed. While the command runs, additional information like the following hints that the fps is inconsistent when reading.

Input #0, dshow, from 'video=USB Video':

Duration: N/A, start: 613320.254228, bitrate: N/A

Stream #0:0: Video: mjpeg (Baseline) (MJPG / 0x47504A4D), yuvj422p(pc, bt470bg/bt709/unknown), 1920x1080, 15 fps, 15 tbr, 10000k tbn

Stream mapping:

Stream #0:0 -> #0:0 (mjpeg (native) -> h264 (libx264))

[libx264 @ 000001cd3023be80] using cpu capabilities: MMX2 SSE2Fast SSSE3 SSE4.2 AVX FMA3 BMI2 AVX2 AVX512

[libx264 @ 000001cd3023be80] profile High 4:2:2, level 4.0, 4:2:2, 8-bit

[libx264 @ 000001cd3023be80] 264 - core 164 r3106 eaa68fa - H.264/MPEG-4 AVC codec - Copyleft 2003-2023 - http://www.videolan.org/x264.html - options: cabac=1 ref=3 deblock=1:0:0 analyse=0x3:0x113 me=hex subme=7 psy=1 psy_rd=1.00:0.00 mixed_ref=1 me_range=16 chroma_me=1 trellis=1 8x8dct=1 cqm=0 deadzone=21,11 fast_pskip=1 chroma_qp_offset=-2 threads=24 lookahead_threads=4 sliced_threads=0 nr=0 decimate=1 interlaced=0 bluray_compat=0 constrained_intra=0 bframes=3 b_pyramid=2 b_adapt=1 b_bias=0 direct=1 weightb=1 open_gop=0 weightp=2 keyint=250 keyint_min=15 scenecut=40 intra_refresh=0 rc_lookahead=40 rc=crf mbtree=1 crf=23.0 qcomp=0.60 qpmin=0 qpmax=69 qpstep=4 ip_ratio=1.40 aq=1:1.00

Output #0, mp4, to 'out_fps15_size10px.mp4':

Metadata:

encoder : Lavf60.3.100

Stream #0:0: Video: h264 (avc1 / 0x31637661), yuvj422p(pc, bt470bg/bt709/unknown, progressive), 1920x1080, q=2-31, 15 fps, 15360 tbn

Metadata:

encoder : Lavc60.3.100 libx264

Side data:

cpb: bitrate max/min/avg: 0/0/0 buffer size: 0 vbv_delay: N/A

frame= 674 fps= 15 q=27.0 size= 221952kB time=00:00:44.80 bitrate=40585.5kbits/s speed=0.978x

Zooming in on the encoding portion, we see a mix of mjpeg and h264. At the moment, I only suspect there is more compression than anticipated, but we should be covered.

Stream #0:0: Video: mjpeg (Baseline) (MJPG / 0x47504A4D), yuvj422p(pc, bt470bg/bt709/unknown), 1920x1080, 15 fps, 15 tbr, 10000k tbn

Stream #0:0 -> #0:0 (mjpeg (native) -> h264 (libx264))

Stream #0:0: Video: h264 (avc1 / 0x31637661), yuvj422p(pc, bt470bg/bt709/unknown, progressive), 1920x1080, q=2-31, 15 fps, 15360 tbn

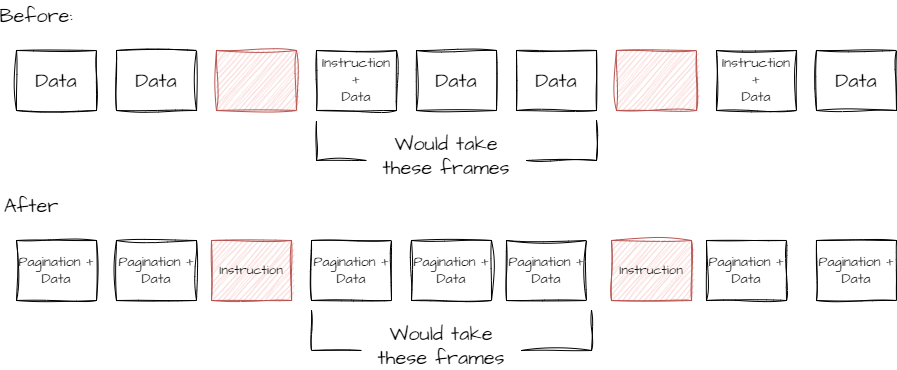

At this point, besides the compression that might cause issues, there might be another detail escaping us. One hint is the fps of the capture in FFmpeg. A hypothesis is that we are missing some frames. Missing even a single frame makes the whole file unreadable. We currently rely on a single red frame to indicate the starting point. After the red frame, we assume the next frame is the instruction frame that gives the number of bytes of the actual content. However, what happens if the frame after the red one fails to be transmitted or read? Also, there is no way to ensure we read all the frames.

Adaptation: Pagination

The next idea is to reserve 64 bits of space in every frame, similar to the instruction. The instruction itself also moves into the first 64 bits of the red frame. The reason is that once we identify the starting frame (the red one), we no longer need to rely on the next frame being the instruction: the red frame will always carry it. Thus, we still know the total number of bytes to recover, but now we can get it more reliably.
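As a minimal sketch of the 64 reserved bits, the snippet below converts a 64-bit value into 64 bits and back. The function names, the most-significant-bit-first order, and the mapping of `true` to one colour are assumptions made for illustration; the actual program may lay the bits out differently.

```rust
/// Number of bits reserved at the start of every frame (from the article).
const HEADER_BITS: usize = 64;

/// Turn a 64-bit value (a page number, or the byte count on the red frame)
/// into 64 bits. Assumption: most significant bit first, and `true` would be
/// drawn as one colour (say white) while `false` is the other.
fn header_to_bits(value: u64) -> [bool; HEADER_BITS] {
    let mut bits = [false; HEADER_BITS];
    for (i, bit) in bits.iter_mut().enumerate() {
        *bit = (value >> (HEADER_BITS - 1 - i)) & 1 == 1;
    }
    bits
}

/// Read the value back from the first 64 bits of a frame.
/// Returns `None` when the frame holds fewer than 64 bits.
fn bits_to_header(bits: &[bool]) -> Option<u64> {
    if bits.len() < HEADER_BITS {
        return None;
    }
    let value = bits[..HEADER_BITS]
        .iter()
        .fold(0u64, |acc, &b| (acc << 1) | b as u64);
    Some(value)
}
```

With a layout like this, every data frame identifies itself, and the red frame carries the byte count without depending on the frame that follows it.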

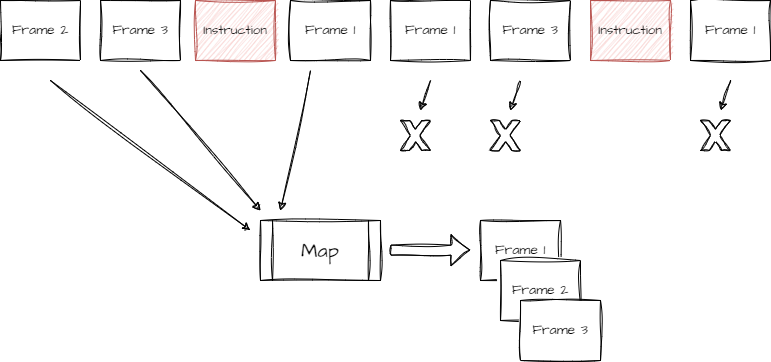

The central concept remains that we generate one starting (red) frame and then the frames for the data. However, with the addition of pagination, we can read (watch) the whole video several times and use a dictionary (hash map) to accumulate the missing frames on each pass. If we notice missing frames after capturing the footage twice, we can keep looping the video for longer and see if we can collect all the frames.

The illustration shows that the first time the system reads the video, it receives frame #2 and saves it into the map. Then it captures frame #3. Because the map does not have frame #3, it adds it. Next, the instruction is read, telling the program how many bytes it needs. It then continues to read and captures frame #1. The order does not matter. The program keeps reading frames, but they are all already in the map, so it saves nothing more until the capture is over. On completion, it knows that frame #1 is the first, and so on, until it cannot find the next frame. In this case, fetching frame #4 returns nothing, which signals to stop concatenating the frames' bytes. Finally, the last step is to look at the instruction to know the number of relevant bytes needed to rebuild the original file.
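The accumulation described above can be sketched with a standard `HashMap`. The `Page` struct and the `accumulate` and `reassemble` functions are hypothetical names for this illustration, not the project's actual code; the sketch assumes pages are numbered from 1 and that the byte count comes from the instruction on the red frame.

```rust
use std::collections::HashMap;

/// Hypothetical decoded frame: its page number and its payload bytes.
struct Page {
    number: u64,
    data: Vec<u8>,
}

/// Keep the first copy of each page. Copies seen again on later loops of the
/// video are ignored, so the arrival order does not matter.
fn accumulate(pages: &mut HashMap<u64, Vec<u8>>, page: Page) {
    pages.entry(page.number).or_insert(page.data);
}

/// Concatenate pages 1, 2, 3, ... until a page is not found, then trim the
/// result to `total_bytes` (the value carried by the instruction).
/// Returns `None` when the pages collected do not cover `total_bytes`.
fn reassemble(pages: &HashMap<u64, Vec<u8>>, total_bytes: usize) -> Option<Vec<u8>> {
    let mut out = Vec::with_capacity(total_bytes);
    let mut next = 1u64;
    while let Some(data) = pages.get(&next) {
        out.extend_from_slice(data);
        next += 1;
    }
    if out.len() < total_bytes {
        return None;
    }
    out.truncate(total_bytes);
    Some(out)
}
```

Feeding pages #2, #3, and then #1 into `accumulate` gives the same output as feeding them in order, and `reassemble` returns `None` for as long as a page in the sequence is still missing.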

This new solution should fix the current limitation of the algorithm.

Testing Locally

The modification worked perfectly locally. I added integration tests in Rust, which is a matter of creating a folder called tests at the project's root. To run only the integration tests, we call cargo test --test "*". Generating the video and reading it back using the Rust program works well. A small visual change in the video is that a frame that is not full of data no longer uses any character for the unused part, which is thus null. Similarly, the starting red frame now contains some white and black in the first 64 pixels (if the size is set to 1). This is the data, including the number of bytes to extract.

Testing with Remote Computer

The ultimate test is to generate the video on a remote machine, play the video and extract using FFmpeg. The same steps as before are required with FFmpeg.

The first test was with a size of 2, using a zip file encoded at 30 fps. With the new pagination mechanism, if FFmpeg cannot read all frames at 30 fps (some are skipped), the whole sequence should still be there within a few loops. I let FFmpeg record about 5 loops of the video, and the result was a video of about 80 megs. However, the code that reads the video was breaking after two frames.

pub fn video_to_frames(extract_options: &ExtractOptions) -> Vec<VideoFrame> {
    let mut video = VideoCapture::from_file(&extract_options.video_file_path, CAP_ANY)
        .expect("Could not open video path");
    let mut all_frames = Vec::new();
    loop {
        let mut frame = Mat::default();
        video
            .read(&mut frame)
            .expect("Reading frame shouldn't crash");
        if frame.cols() == 0 {
            break; // <------------------- Here
        }
        let source = VideoFrame::from(frame, extract_options.size);
        match source {
            Ok(frame) => {
                all_frames.push(frame);
            }
            Err(err) => {
                panic!("Reading from VideoFrame: {:?}", err);
            }
        }
    }
    all_frames
}

I tried several different ways to open the video, such as using the FFmpeg wrapper for Rust, without success. The video produced can be opened with software like VLC, but OpenCV could not open it. The FFmpeg wrapper (ffmpeg_next) was still not compiling after 30 minutes of trying, so I gave up.

Codec

The command to read the video stream from the capture card uses -vcodec mjpeg. As discussed in a previous post, this is because FFmpeg reports that this is what the device provides.

ffmpeg -f dshow -list_options true -i video="USB Video"

[dshow @ 0000022277b00100] vcodec=mjpeg min s=1920x1080 fps=10 max s=1920x1080 fps=60.0002

[dshow @ 0000022277b00100] vcodec=mjpeg min s=1920x1080 fps=10 max s=1920x1080 fps=60.0002 (pc, bt470bg/bt709/unknown, center)

[dshow @ 0000022277b00100] pixel_format=yuyv422 min s=1920x1080 fps=5 max s=1920x1080 fps=5

[dshow @ 0000022277b00100] pixel_format=yuyv422 min s=1920x1080 fps=5 max s=1920x1080 fps=5 (tv, bt470bg/bt709/unknown, topleft)

However, when saving to the file, we could convert into a different format that OpenCV can open.

The codec and pixel format I tried were valid values according to the lists produced by the Windows ffmpeg commands below:

ffmpeg -codecs > codec.txt

ffmpeg -pix_fmts > pix.txt

Even so, the command to read the capture card kept giving me buffer size limit errors or settings errors. I decided to avoid choosing a codec and to keep the raw data instead (-c:v copy). It does create a huge file, but I was able to read the file using VLC!

ffmpeg -f dshow -video_size 1920x1080 -i video="USB Video" -rtbufsize 200M -framerate 30 -c:v copy output.mp4

Using the Rust code on the raw output worked! The raw capture of 167 megs, under 12 seconds long, produced a perfect 2 meg image in 3 minutes 49 seconds. The image is the same as the source.

Let's use a codec and see how we can optimize the overall transformation time.

ffmpeg -f dshow -video_size 1920x1080 -i video="USB Video" -rtbufsize 200M -framerate 30 -c:v copy input.mp4

ffmpeg -i input.mp4 -c:v libx264 -crf 23 -preset medium -c:a aac -b:a 128k output.mp4

The time was not better (I stopped the Rust script after 5 minutes, when it was not even at 25%), and the compression actually adds potential issues. Also, while the second command runs, the console is flooded with the following errors. Still, the file can be read by VLC and by the Rust program.

[mjpeg @ 0000025116f78740] Found EOI before any SOF, ignoring

[mjpeg @ 0000025116f78740] No JPEG data found in image

Error while decoding stream #0:0: Invalid data found when processing input

No Compression, 1 size pixel zip file

The ultimate test was to use a zip file with the highest black and white information density: each bit uses 1 pixel.

ffmpeg -f dshow -video_size 1920x1080 -i video="USB Video" -rtbufsize 200M -framerate 30 -c:v copy input_zip.mp4

cargo run --release -- -m extract -i outputs/input_zip.mp4 -o outputs/PictureFiles.zip --fps 30 --height 1080 --width 1920 --size 1 -p true -a bw

I let the recording watch the video twice. It produced a file of 328 megs (16 seconds). The Rust script ran for 1 minute and 6 seconds and failed with missing frames:

We have not receive all frames. We received 6 frames for a total of 1555152 bytes and we expected 54347552 bytes

I captured again, this time letting about 12 iterations of the movie be recorded. I could see that my personal laptop, which was playing the video, was not smooth, and its fan was running very hard. I suspect frames are being skipped, as I could see some freezes. The generated file was about 850 megs. Unfortunately, the same 6 frames were missing.
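The figures in the error message can be checked with a little arithmetic. The sketch below assumes one bit per square of `size` by `size` pixels and 64 reserved bits per frame; this layout is inferred from the numbers in this post, and the function names are made up for the illustration.

```rust
/// Bits reserved at the start of every frame (from the pagination design).
const RESERVED_BITS: u64 = 64;

/// Payload bytes that fit in one frame, assuming one bit per `size` x `size`
/// square of pixels, minus the reserved bits. This layout is an assumption.
fn payload_bytes_per_frame(width: u64, height: u64, size: u64) -> u64 {
    let cells = (width / size) * (height / size);
    cells.saturating_sub(RESERVED_BITS) / 8
}

/// Number of data frames needed to carry `total_bytes` (rounded up).
fn frames_needed(total_bytes: u64, payload: u64) -> u64 {
    (total_bytes + payload - 1) / payload
}
```

For 1920x1080 with a size of 1, this gives 259,192 payload bytes per frame. Six frames then hold 6 x 259,192 = 1,555,152 bytes, which matches the message exactly, and the expected 54,347,552 bytes would need 210 frames. If that layout assumption holds, the capture recovered only 6 of about 210 distinct frames.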